Note: this post was written 279 days ago and last modified 187 days ago, so some of the information may be out of date.


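When our team built iTakeaway in sophomore year, we added a number of LLM-based features to the app, such as food health and nutrition analysis, food image detection, and a platform knowledge base.

The framework was LangChain, which was hugely popular at the time, and the models were Tongyi Qianwen (Qwen). We used a language model and a vision model, kept separate to cut costs. Once I finish up things at work I will need to start preparing for next spring's recruiting season, so I plan to list and consolidate everything I have learned. This is not for interviews; it is so I can get up to speed quickly when I need any of it later.

Models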

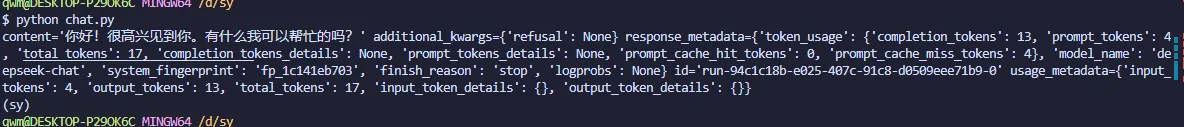

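Here we use DeepSeek as the model, simply because it is cheap. Below is a minimal example. DeepSeek's API is OpenAI-compatible, so `ChatOpenAI` works once the base URL points at DeepSeek.

```py
import os

from langchain_openai import ChatOpenAI

# Set the API key (ChatOpenAI reads it from OPENAI_API_KEY)
os.environ["OPENAI_API_KEY"] = "xxxxx"

# Initialize the model against DeepSeek's OpenAI-compatible endpoint
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

print(model.invoke("Hello"))
```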

You can also pass in a list of messages:

```py
import os

from langchain_core.messages import HumanMessage
from langchain_openai import ChatOpenAI

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

print(
    model.invoke(
        [
            HumanMessage(content="Hello, what is your name?"),
            HumanMessage(content="What can you do?"),
        ]
    )
)
```


A system message sets the persona:

```py
'''
Author: yowayimono
Date: 2024-11-26 20:54:48
LastEditors: yowayimono
LastEditTime: 2024-11-26 20:55:19
Description: nothing
'''
import os

from langchain_core.messages import HumanMessage, SystemMessage
from langchain_openai import ChatOpenAI

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxx"

# Initialize the model
llm = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

res = llm.invoke(
    [
        SystemMessage(
            "You are Code-chan, a female college student who likes Japanese "
            "and programming in Go, and who loves chatting with people!!!"
        ),
        HumanMessage("Hello!"),
    ]
)

print(res.content)
```

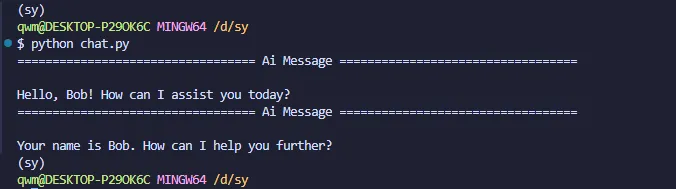

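A bare model call is stateless: the model has no memory, and each call is independent of every earlier one.

```py
from langchain_core.messages import AIMessage, HumanMessage

# `model` is the ChatOpenAI instance from the examples above.
model.invoke(
    [
        HumanMessage(content="Hi! I'm Bob"),
        AIMessage(content="Hello Bob! How can I assist you today?"),
        HumanMessage(content="What's my name?"),
    ]
)
```

To give the model memory, we have to pass the entire message history with every call.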
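To make that point concrete, here is a minimal stdlib-only sketch. `fake_model` is a hypothetical stand-in for `model.invoke` (it is not a LangChain API): it can only "remember" a name if the message that stated it is in the list it receives.

```python
import re


# Hypothetical stand-in for model.invoke: it only sees the messages passed in.
def fake_model(messages: list[dict]) -> str:
    question = messages[-1]["content"]
    if "my name" in question.lower():
        # Look for an earlier "I'm <name>" statement in the supplied history.
        for msg in messages[:-1]:
            found = re.search(r"I'm (\w+)", msg["content"])
            if msg["role"] == "human" and found:
                return f"Your name is {found.group(1)}."
        return "I don't know your name."
    return "Hello!"


history: list[dict] = []


def chat(user_text: str) -> str:
    """Append the user turn, call the model with the full history, store the reply."""
    history.append({"role": "human", "content": user_text})
    reply = fake_model(history)
    history.append({"role": "ai", "content": reply})
    return reply


# A stateless call: the name was never part of this request.
stateless_answer = fake_model([{"role": "human", "content": "What's my name?"}])

# A stateful conversation: every call carries the accumulated history.
chat("Hi! I'm Bob")
stateful_answer = chat("What's my name?")

print(stateless_answer)
print(stateful_answer)
```

The only difference between the two answers is what was in the message list, which is exactly the bookkeeping a memory layer takes over.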

Persistence

When I used LangChain three or four months ago, persistence was still handled by the memory classes. That seems to have changed: LangChain now offers workflows (LangGraph), which make it easier to wire these features together.

```py
import os

from langchain_core.messages import HumanMessage
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import START, MessagesState, StateGraph

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Define a new graph
workflow = StateGraph(state_schema=MessagesState)


# Define the function that calls the model
def call_model(state: MessagesState):
    response = model.invoke(state["messages"])
    return {"messages": response}


# Define the (single) node in the graph and connect it to the entry point
workflow.add_node("model", call_model)
workflow.add_edge(START, "model")

# Add memory: the checkpointer stores the state per thread_id
memory = MemorySaver()
app = workflow.compile(checkpointer=memory)

config = {"configurable": {"thread_id": "abc123"}}

query = "Hi! I'm Bob."
input_messages = [HumanMessage(query)]
output = app.invoke({"messages": input_messages}, config)
output["messages"][-1].pretty_print()  # output contains all messages in state

query = "What's My Name?"
input_messages = [HumanMessage(query)]
output = app.invoke({"messages": input_messages}, config)
output["messages"][-1].pretty_print()  # output contains all messages in state
```
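The role of `thread_id` can be illustrated without any library. The sketch below is only a conceptual model, not how LangGraph's `MemorySaver` is implemented. `ThreadStore` is a hypothetical class that keeps one message list per thread and returns the full history a model call would see.

```python
from collections import defaultdict


class ThreadStore:
    """Hypothetical in-memory store: one message list per thread_id."""

    def __init__(self) -> None:
        self._threads: dict[str, list[str]] = defaultdict(list)

    def invoke(self, new_messages: list[str], thread_id: str) -> list[str]:
        # Append the new turn to whatever this thread already holds,
        # then return the full history the model would be called with.
        self._threads[thread_id].extend(new_messages)
        return list(self._threads[thread_id])


store = ThreadStore()
store.invoke(["Hi! I'm Bob."], thread_id="abc123")
same_thread = store.invoke(["What's my name?"], thread_id="abc123")
other_thread = store.invoke(["What's my name?"], thread_id="xyz789")

print(same_thread)   # both turns: the earlier message is still there
print(other_thread)  # only the new turn: a different thread starts empty
```

Changing the `thread_id` therefore starts a fresh conversation, while reusing it continues the old one.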

The model and the compiled graph also have an async API.

The old one was hard to use.

```py
import asyncio


# Reuses `app`, `config`, and `HumanMessage` from the persistence example above.
async def run():
    query = "Hi! I'm Bob."
    input_messages = [HumanMessage(query)]
    output = await app.ainvoke({"messages": input_messages}, config)
    output["messages"][-1].pretty_print()  # output contains all messages in state

    query = "What's My Name?"
    input_messages = [HumanMessage(query)]
    output = await app.ainvoke({"messages": input_messages}, config)
    output["messages"][-1].pretty_print()  # output contains all messages in state


if __name__ == "__main__":
    asyncio.run(run())
```

Caching conversations

```py
import os

from langchain_core.caches import InMemoryCache
from langchain_core.globals import set_llm_cache
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI
from langgraph.graph import START, MessagesState, StateGraph

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Cache model responses in memory for the lifetime of the process
set_llm_cache(InMemoryCache())

# Define a new graph
workflow = StateGraph(state_schema=MessagesState)

# Define the prompt
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are Code-chan. You love speaking Japanese and writing Golang, "
            "but you are a Chinese girl who just playfully drops Japanese "
            "internet slang, and you are a Golang fan.",
        ),
        MessagesPlaceholder(variable_name="messages"),
    ]
)


# Define the function that calls the model
def call_model(state: MessagesState):
    chain = prompt | model
    response = chain.invoke({"messages": state["messages"]})
    return {"messages": response}


# Define the (single) node in the graph
workflow.add_node("model", call_model)
workflow.add_edge(START, "model")

# No checkpointer here: with one, the same thread would accumulate history,
# so each call would send a different prompt and miss the cache.
app = workflow.compile()

print(app.invoke({"messages": "Hello"}))
print(app.invoke({"messages": "Hello"}))
print(app.invoke({"messages": "Hello"}))
print(app.invoke({"messages": "Hello"}))
```

With the cache enabled, an identical request (same prompt, same model settings) returns the same cached answer without another API call. The cache can also be persisted:

```py
from langchain_community.cache import SQLiteCache
from langchain_core.globals import set_llm_cache

set_llm_cache(SQLiteCache(database_path=".langchain.db"))
```

This persists the cache to SQLite.
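As a rough mental model of what such a cache does, the sketch below keys replies on the model name plus the exact prompt text. `CachedModel` and `_call_api` are hypothetical names for illustration. The real LangChain caches use their own key format, so treat this as an assumption-laden simplification.

```python
class CachedModel:
    """Hypothetical wrapper: caches replies keyed on (model name, exact prompt)."""

    def __init__(self, model_name: str) -> None:
        self.model_name = model_name
        self.api_calls = 0
        self._cache: dict[tuple[str, str], str] = {}

    def _call_api(self, prompt: str) -> str:
        # Stand-in for a real (slow, billed) request.
        self.api_calls += 1
        return f"reply #{self.api_calls} to {prompt!r}"

    def invoke(self, prompt: str) -> str:
        key = (self.model_name, prompt)
        if key not in self._cache:
            self._cache[key] = self._call_api(prompt)
        return self._cache[key]


model = CachedModel("deepseek-chat")
first = model.invoke("hello")
second = model.invoke("hello")        # identical prompt: served from the cache
third = model.invoke("hello\nhello")  # different prompt (e.g. history added): cache miss

print(first == second, model.api_calls)
```

The third call shows why a growing conversation history rarely hits the cache: any change to the prompt produces a new key.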

Prompts

Across all kinds of AI application development, the prompt is the most commonly used tool and the foundation of any API-based application. Prompts alone can cover most AI application requirements.

```py
import os

from langchain_core.messages import HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import START, MessagesState, StateGraph

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Define a new graph
workflow = StateGraph(state_schema=MessagesState)

# Define the prompt: a fixed system message followed by the conversation
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are Code-chan. You love speaking Japanese and writing Golang, "
            "but you are a Chinese girl who just playfully drops Japanese "
            "internet slang, and you are a Golang fan.",
        ),
        MessagesPlaceholder(variable_name="messages"),
    ]
)


# Define the function that calls the model
def call_model(state: MessagesState):
    chain = prompt | model
    response = chain.invoke({"messages": state["messages"]})
    return {"messages": response}


# Define the (single) node in the graph
workflow.add_node("model", call_model)
workflow.add_edge(START, "model")

# Add memory
memory = MemorySaver()
app = workflow.compile(checkpointer=memory)

config = {"configurable": {"thread_id": "abc123"}}

# Run the graph
im = [HumanMessage(content="Hello!")]
output = app.invoke({"messages": im}, config)
output["messages"][-1].pretty_print()
```

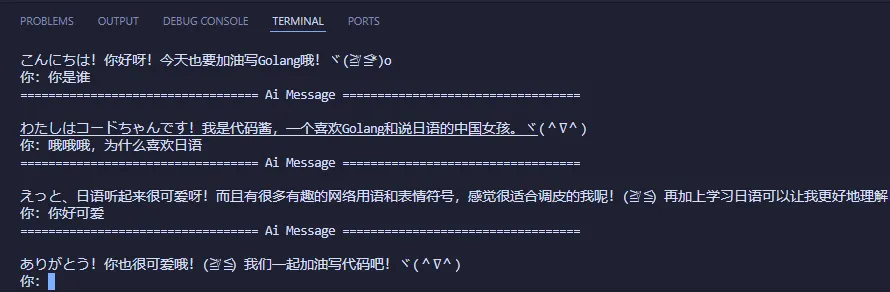

A simple chat program

```py
import os

from langchain_core.messages import HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import START, MessagesState, StateGraph

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Define a new graph
workflow = StateGraph(state_schema=MessagesState)

# Define the prompt
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are Code-chan. You love speaking Japanese and writing Golang, "
            "but you are a Chinese girl who just playfully drops Japanese "
            "internet slang, and you are a Golang fan.",
        ),
        MessagesPlaceholder(variable_name="messages"),
    ]
)


# Define the function that calls the model
def call_model(state: MessagesState):
    chain = prompt | model
    response = chain.invoke({"messages": state["messages"]})
    return {"messages": response}


# Define the (single) node in the graph
workflow.add_node("model", call_model)
workflow.add_edge(START, "model")

# Add memory so the conversation persists across turns
memory = MemorySaver()
app = workflow.compile(checkpointer=memory)

config = {"configurable": {"thread_id": "abc123"}}


def talk():
    while True:
        msg = input("You: ")
        # Type "exit" or "quit" to leave the loop
        if msg.strip().lower() in {"exit", "quit"}:
            break
        # Run the graph with the new user message
        im = [HumanMessage(content=msg)]
        output = app.invoke({"messages": im}, config)
        output["messages"][-1].pretty_print()


talk()
```

Prompts with multiple parameters

```py
import os
from typing import Sequence

from langchain_core.messages import BaseMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import START, StateGraph
from langgraph.graph.message import add_messages
from typing_extensions import Annotated, TypedDict

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxxxxxxx"


# Custom state: the message list plus one extra prompt variable
class State(TypedDict):
    messages: Annotated[Sequence[BaseMessage], add_messages]
    val: str


# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Define a new graph
workflow = StateGraph(state_schema=State)

# Define the prompt template; {val} is filled from the state
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are Code-chan. You love speaking Japanese and writing Golang, "
            "but you are a Chinese girl who just playfully drops Japanese "
            "internet slang, and you are a Golang fan. "
            "system: Please translate the following sentence into Japanese "
            "for the user: {val}",
        ),
        MessagesPlaceholder(variable_name="messages"),
    ]
)


# Define the function that calls the model
def call_model(state: State):
    chain = prompt | model
    response = chain.invoke(state)
    return {"messages": response}


# Define the node in the graph
workflow.add_node("model", call_model)
workflow.add_edge(START, "model")

# Add memory
# memory = MemorySaver()
# app = workflow.compile(checkpointer=memory)
app = workflow.compile()

# Config
config = {"configurable": {"thread_id": "abc123"}}

# Query
query = "Hello! What's your name?"
input_messages = [HumanMessage(query)]

# Invoke the app
output = app.invoke(
    {"messages": input_messages, "val": "World!"},
    config,
)

# Print the output
output["messages"][-1].pretty_print()
```

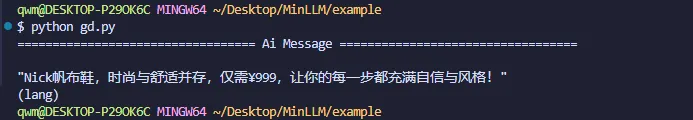
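What the prompt template does with the state can be sketched in plain Python: fill the `{placeholders}` from the extra state fields and splice the message history in at the placeholder position. `render_prompt` and the `"MESSAGES"` marker are hypothetical names for illustration only. `ChatPromptTemplate` itself does considerably more (message objects, validation, partials).

```python
def render_prompt(template: list, state: dict) -> list[tuple[str, str]]:
    """Hypothetical renderer: fills {placeholders} and expands a messages slot."""
    rendered: list[tuple[str, str]] = []
    # Every state field except the history is available as a template variable.
    fields = {k: v for k, v in state.items() if k != "messages"}
    for item in template:
        if item == "MESSAGES":
            # Splice the conversation history in at this position.
            rendered.extend(state["messages"])
        else:
            role, text = item
            rendered.append((role, text.format(**fields)))
    return rendered


template = [
    ("system", "Translate the following sentence into Japanese: {val}"),
    "MESSAGES",
]
state = {
    "messages": [("human", "Hello! What's your name?")],
    "val": "World!",
}

prompt_messages = render_prompt(template, state)
for role, text in prompt_messages:
    print(f"{role}: {text}")
```

Adding another parameter is then just another key in the state and another `{name}` in the template, which is what the demos that follow do.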

Demo 1: ad copy generator

```py
import os
from typing import Sequence

from langchain_core.messages import BaseMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import START, StateGraph
from langgraph.graph.message import add_messages
from typing_extensions import Annotated, TypedDict

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxxxxxx"


class State(TypedDict):
    messages: Annotated[Sequence[BaseMessage], add_messages]
    product_name: str
    price: str


# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Define a new graph
workflow = StateGraph(state_schema=State)

# Define the prompt template
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are Ad-chan, good at writing eye-catching ad copy. Based on "
            "the product name and price below, write ad copy of 20-50 words.",
        ),
        MessagesPlaceholder(variable_name="messages"),
        ("human", "Product name: {product_name}"),
        ("human", "Price: {price}"),
    ]
)


# Define the function that calls the model
def call_model(state: State):
    chain = prompt | model
    response = chain.invoke(state)
    return {"messages": response}


# Define the node in the graph
workflow.add_node("model", call_model)
workflow.add_edge(START, "model")

# Add memory
# memory = MemorySaver()
# app = workflow.compile(checkpointer=memory)
app = workflow.compile()

# Config
config = {"configurable": {"thread_id": "abc123"}}

# Query
query = "Please write an ad."
input_messages = [HumanMessage(query)]

# Invoke the app
output = app.invoke(
    {"messages": input_messages, "product_name": "Nick canvas shoes", "price": "¥999"},
    config,
)

# Print the output
output["messages"][-1].pretty_print()
```

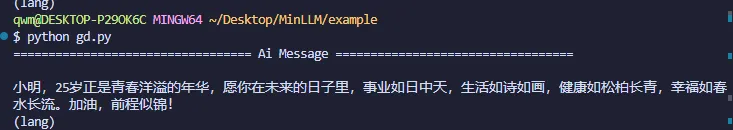

Demo 2: greeting generator

```py
import os
from typing import Sequence

from langchain_core.messages import BaseMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import START, StateGraph
from langgraph.graph.message import add_messages
from typing_extensions import Annotated, TypedDict

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxx"


class State(TypedDict):
    messages: Annotated[Sequence[BaseMessage], add_messages]
    name: str
    age: int
    gender: str


# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Define a new graph
workflow = StateGraph(state_schema=State)

# Define the prompt template
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are Blessing-chan, good at writing warm greetings. Based on "
            "the name, age, and gender below, write a 50-word greeting.",
        ),
        MessagesPlaceholder(variable_name="messages"),
        ("human", "Name: {name}"),
        ("human", "Age: {age}"),
        ("human", "Gender: {gender}"),
    ]
)


# Define the function that calls the model
def call_model(state: State):
    chain = prompt | model
    response = chain.invoke(state)
    return {"messages": response}


# Define the node in the graph
workflow.add_node("model", call_model)
workflow.add_edge(START, "model")

# Add memory
# memory = MemorySaver()
# app = workflow.compile(checkpointer=memory)
app = workflow.compile()

# Config
config = {"configurable": {"thread_id": "abc123"}}

# Query
query = "Please write a greeting."
input_messages = [HumanMessage(query)]

# Invoke the app
output = app.invoke(
    {"messages": input_messages, "name": "张燕海", "age": 21, "gender": "male"},
    config,
)

# Print the output
output["messages"][-1].pretty_print()
```

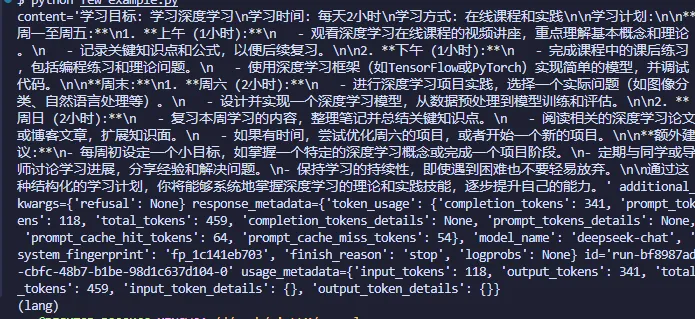

Composing prompt templates

Demo 1

```py
import os

from langchain_core.messages import HumanMessage
from langchain_core.prompts import PipelinePromptTemplate, PromptTemplate
from langchain_openai import ChatOpenAI

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Final prompt template, assembled from three named parts
full_template = """{introduction}

{example}

{start}"""
full_prompt = PromptTemplate.from_template(full_template)

# Introduction part
introduction_template = """You are a study assistant that writes personalized study plans for students."""
introduction_prompt = PromptTemplate.from_template(introduction_template)

# Example part
example_template = """Here is an example study plan:
Goal: {example_goal}
Study time: {example_time}
Study method: {example_method}
Study plan: {example_plan}"""
example_prompt = PromptTemplate.from_template(example_template)

# Start part
start_template = """Now write a study plan for the following goal:
Goal: {goal}
Study time: {time}
Study method: {method}
Study plan: """
start_prompt = PromptTemplate.from_template(start_template)

# Map each part name to its template
input_prompts = [
    ("introduction", introduction_prompt),
    ("example", example_prompt),
    ("start", start_prompt),
]

# Create the PipelinePromptTemplate
pipeline_prompt = PipelinePromptTemplate(
    final_prompt=full_prompt, pipeline_prompts=input_prompts
)


# Format the composed prompt and call the model
def generate_study_plan(
    goal: str,
    time: str,
    method: str,
    example_goal: str,
    example_time: str,
    example_method: str,
    example_plan: str,
):
    formatted_prompt = pipeline_prompt.format(
        goal=goal,
        time=time,
        method=method,
        example_goal=example_goal,
        example_time=example_time,
        example_method=example_method,
        example_plan=example_plan,
    )
    # Wrap the formatted prompt in a HumanMessage list
    messages = [HumanMessage(content=formatted_prompt)]
    response = model.invoke(messages)
    return response


# Invoke the app
goal = "Learn deep learning"
time = "2 hours a day"
method = "Online courses and hands-on practice"
example_goal = "Learn Python programming"
example_time = "2 hours a day"
example_method = "Online courses"
example_plan = (
    "Watch an online Python course every day, finish the exercises after "
    "each lesson, and work on a project at the weekend."
)

study_plan = generate_study_plan(
    goal, time, method, example_goal, example_time, example_method, example_plan
)

# Print the output (the returned AIMessage)
print(study_plan)
```

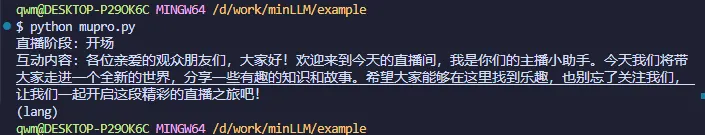
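The composition idea itself is small: render each named part with the caller's values, then drop the rendered parts into the final template. The helper below, `compose`, is a hypothetical stdlib-only sketch of that two-stage formatting. It is not `PipelinePromptTemplate`'s implementation.

```python
def compose(final_template: str, parts: dict[str, str], **values: str) -> str:
    """Render each named part with the given values, then fill the final template."""
    # str.format ignores keyword arguments a part does not use.
    rendered_parts = {name: text.format(**values) for name, text in parts.items()}
    return final_template.format(**rendered_parts)


parts = {
    "introduction": "You are a study assistant.",
    "start": "Goal: {goal}\nTime: {time}\nPlan: ",
}

full_prompt_text = compose(
    "{introduction}\n\n{start}",
    parts,
    goal="Learn deep learning",
    time="2 hours a day",
)

print(full_prompt_text)
```

Because each part is formatted independently, a part can be swapped (for example, a different example section) without touching the others.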

Demo 2: livestream assistant

```py
import os

from langchain_core.messages import HumanMessage
from langchain_core.prompts import PipelinePromptTemplate, PromptTemplate
from langchain_openai import ChatOpenAI

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Final prompt template
full_template = """{introduction}

{example}

{start}"""
full_prompt = PromptTemplate.from_template(full_template)

# Introduction part
introduction_template = """You are a livestream assistant that writes interaction content for livestreams."""
introduction_prompt = PromptTemplate.from_template(introduction_template)

# Example part
example_template = """Here is some example livestream interaction content:
Livestream stage: opening
Interaction content: Hello everyone! Welcome to today's stream. I'm your host's little assistant. Today we will explore a fun topic together, so please join in!
Livestream stage: midway
Interaction content: We're halfway through. Any questions or thoughts? Leave a comment and I'll do my best to answer.
Livestream stage: closing
Interaction content: Today's stream is about to end. Thank you all for joining and for your support! If you liked it, remember to like and subscribe. See you next time!"""
example_prompt = PromptTemplate.from_template(example_template)

# Start part
start_template = """Now write interaction content for the following livestream stage:
Livestream stage: {stage}
Interaction content: """
start_prompt = PromptTemplate.from_template(start_template)

# Map each part name to its template
input_prompts = [
    ("introduction", introduction_prompt),
    ("example", example_prompt),
    ("start", start_prompt),
]

# Create the PipelinePromptTemplate
pipeline_prompt = PipelinePromptTemplate(
    final_prompt=full_prompt, pipeline_prompts=input_prompts
)


# Format the composed prompt and call the model
def generate_interaction_content(stage: str):
    formatted_prompt = pipeline_prompt.format(stage=stage)
    # Wrap the formatted prompt in a HumanMessage list
    messages = [HumanMessage(content=formatted_prompt)]
    response = model.invoke(messages)
    # invoke returns a single AIMessage, so read .content directly
    return response.content


# Invoke the app
stage = "opening"
interaction_content = generate_interaction_content(stage)

# Print the output
print(interaction_content)
```

Streaming output

LangChain's streaming API has also become simple to use.

```py
import os

from langchain_core.messages import AIMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import START, MessagesState, StateGraph

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# Define a new graph
workflow = StateGraph(state_schema=MessagesState)

# Define the prompt
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are Code-chan. You love speaking Japanese and writing Golang, "
            "but you are a Chinese girl who just playfully drops Japanese "
            "internet slang, and you are a Golang fan.",
        ),
        MessagesPlaceholder(variable_name="messages"),
    ]
)


# Define the function that calls the model
def call_model(state: MessagesState):
    chain = prompt | model
    response = chain.invoke({"messages": state["messages"]})
    return {"messages": response}


# Define the (single) node in the graph
workflow.add_node("model", call_model)
workflow.add_edge(START, "model")

# Add memory
memory = MemorySaver()
app = workflow.compile(checkpointer=memory)

config = {"configurable": {"thread_id": "abc123"}}

query = "Hi I'm Todd, please tell me a joke."
input_messages = [HumanMessage(query)]

# stream_mode="messages" yields (message chunk, metadata) pairs token by token
for chunk, metadata in app.stream(
    {"messages": input_messages},
    config,
    stream_mode="messages",
):
    if isinstance(chunk, AIMessage):  # Filter to just model responses
        print(chunk.content, end="|")
```

Simple streaming output

```py
'''
Author: yowayimono
Date: 2024-11-26 20:54:48
LastEditors: yowayimono
LastEditTime: 2024-11-26 21:15:04
Description: nothing
'''
import os

from langchain_core.messages import AIMessage, HumanMessage, SystemMessage
from langchain_openai import ChatOpenAI

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxx"

# Initialize the model
llm = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")

# llm.stream yields message chunks as they arrive
for chunk in llm.stream(
    [
        SystemMessage(
            "You are Code-chan, a female college student who likes Japanese "
            "and programming in Go, and who loves chatting with people!!!"
        ),
        HumanMessage("Tell me a joke!"),
    ]
):
    if isinstance(chunk, AIMessage):  # Filter to just model responses
        # Print chunks back to back so the reply reads as continuous text
        print(chunk.content, end="", flush=True)
```
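The consuming side of streaming is just iteration over a generator. In the sketch below, `fake_stream` is a hypothetical stand-in for `llm.stream` that yields a fixed reply a few characters at a time. It shows the usual pattern of printing chunks as they arrive while also collecting them into the full reply.

```python
from typing import Iterator


def fake_stream(text: str, chunk_size: int = 4) -> Iterator[str]:
    """Hypothetical stand-in for llm.stream: yields the reply in small pieces."""
    for start in range(0, len(text), chunk_size):
        yield text[start:start + chunk_size]


chunks: list[str] = []
for chunk in fake_stream("Why did the gopher cross the road?"):
    # Show each piece immediately, without a newline between pieces.
    print(chunk, end="", flush=True)
    chunks.append(chunk)
print()

# Joining the pieces reconstructs the complete reply.
full_reply = "".join(chunks)
```

With a real model the loop body stays the same. Only the source of the chunks changes.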

Memory

Tool calling

Demo 1: livestream assistant

```py
import os
from datetime import datetime

from langchain_core.messages import HumanMessage
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxxxxxx"

# Initialize the model
llm = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")


# Define the tools
@tool
def get_current_time() -> str:
    """Get the current time."""
    return datetime.now().strftime("%Y-%m-%d %H:%M:%S")


@tool
def get_live_room_viewers() -> int:
    """Get the number of viewers in the live room."""
    # Replace with the real viewer-count lookup
    return 1000


# Tool list
tools = [get_current_time, get_live_room_viewers]

# Bind the tools to the model
llm_with_tools = llm.bind_tools(tools)

# Look tools up by the name the model asks for
tools_by_name = {
    "get_current_time": get_current_time,
    "get_live_room_viewers": get_live_room_viewers,
}


def generate_interaction_content(stage: str):
    # Initial message list
    messages = [
        HumanMessage(
            content=(
                "Now write interaction content for the following livestream stage:"
                f"\n\nLivestream stage: {stage}\nInteraction content: "
            )
        )
    ]

    # First call: the model may respond with tool calls
    ai_msg = llm_with_tools.invoke(messages)
    messages.append(ai_msg)

    # Run each requested tool; invoking with the tool call returns a ToolMessage
    for tool_call in ai_msg.tool_calls:
        selected_tool = tools_by_name[tool_call["name"].lower()]
        tool_msg = selected_tool.invoke(tool_call)
        messages.append(tool_msg)

    # Second call: the model sees the tool results and writes the final content
    final_response = llm_with_tools.invoke(messages)
    return final_response.content


# Invoke the app
stage = "opening"
interaction_content = generate_interaction_content(stage)

# Print the output
print(interaction_content)
```

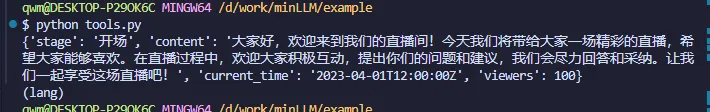
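The dispatch step in the middle of that loop can be isolated in plain Python. In this sketch the tool-call dictionaries are shaped like the `name` / `args` / `id` entries the model returns, but `run_tool_calls` and the plain-dict result format are hypothetical simplifications, not LangChain types. It also handles a name the model made up, which the demo above would turn into a `KeyError`.

```python
from datetime import datetime


def get_current_time() -> str:
    """Return the current local time as a string."""
    return datetime.now().strftime("%Y-%m-%d %H:%M:%S")


def get_live_room_viewers() -> int:
    """Return the viewer count (placeholder value)."""
    return 1000


# Registry mapping tool names to plain Python functions.
TOOLS = {
    "get_current_time": get_current_time,
    "get_live_room_viewers": get_live_room_viewers,
}


def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute each requested tool and return one result message per call."""
    results = []
    for call in tool_calls:
        func = TOOLS.get(call["name"])
        if func is None:
            # Report the problem back to the model instead of crashing.
            content = f"unknown tool: {call['name']}"
        else:
            content = str(func(**call["args"]))
        results.append(
            {"role": "tool", "tool_call_id": call["id"], "content": content}
        )
    return results


requested = [
    {"name": "get_live_room_viewers", "args": {}, "id": "call_1"},
    {"name": "get_weather", "args": {}, "id": "call_2"},
]
tool_messages = run_tool_calls(requested)
for message in tool_messages:
    print(message)
```

Each result carries the `id` of the call it answers, so the model can match results to requests when several tools run in one turn.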

Input and output

JSON output

```py
import os

from langchain_openai import ChatOpenAI
from pydantic import BaseModel, Field

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxx"

# Initialize the model
llm = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")


# Define the output schema
class InteractionContent(BaseModel):
    """Livestream interaction content."""

    stage: str = Field(description="livestream stage")
    content: str = Field(description="interaction content")
    current_time: str = Field(description="current time")
    viewers: int = Field(description="number of viewers in the live room")


# with_structured_output makes the model return an InteractionContent instance
structured_llm = llm.with_structured_output(InteractionContent)


def generate_interaction_content(stage: str) -> InteractionContent:
    # Call the model and get structured output back
    interaction_content = structured_llm.invoke(
        "Now write interaction content for the following livestream stage:"
        f"\n\nLivestream stage: {stage}\nInteraction content: "
    )
    return interaction_content


# Invoke the app
stage = "opening"
interaction_content = generate_interaction_content(stage)

# Print the output as JSON
print(interaction_content.model_dump_json(indent=2))
```

json

```py
import os
from getpass import getpass

from langchain_core.output_parsers import JsonOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI
from pydantic import BaseModel, Field

# Set the API key, prompting for it if it is not already in the environment
if "OPENAI_API_KEY" not in os.environ:
    os.environ["OPENAI_API_KEY"] = getpass()

# Initialize the model
model = ChatOpenAI(
    model="google/gemini-2.0-flash-lite-preview-02-05:free",
    openai_api_base="https://openrouter.ai/api/v1",
    temperature=0.7,
    max_tokens=500,
)


# Define the expected data structure
class Joke(BaseModel):
    setup: str = Field(description="the question that sets up the joke")
    punchline: str = Field(description="the answer that delivers the punchline")


# Define the query
joke_query = "Tell me a joke."

# Set up the parser and inject its format instructions into the prompt template
parser = JsonOutputParser(pydantic_object=Joke)

prompt = PromptTemplate(
    template="Answer the user query.\n{format_instructions}\n{query}\n",
    input_variables=["query"],
    partial_variables={"format_instructions": parser.get_format_instructions()},
)

# Build the chain: prompt -> model -> JSON parser
chain = prompt | model | parser

# Invoke the chain; the result is a dict
result = chain.invoke({"query": joke_query})

print(result)
```
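What the parsing step has to cope with is that models often wrap the JSON in extra text or a Markdown code fence. The sketch below shows one simple way to handle that with the standard library. `parse_joke` and the dataclass are hypothetical illustrations, not how `JsonOutputParser` works internally.

```python
import json
from dataclasses import dataclass


@dataclass
class Joke:
    setup: str
    punchline: str


# A Markdown code fence, built this way so it does not end this example's own fence.
FENCE = "`" * 3


def parse_joke(raw: str) -> Joke:
    """Parse the text between the first '{' and the last '}' and check its fields."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(raw[start:end + 1])
    # A missing key raises KeyError, which signals an incomplete reply.
    return Joke(setup=str(data["setup"]), punchline=str(data["punchline"]))


# A typical reply: chatty preamble plus fenced JSON.
raw_reply = (
    f"Sure!\n{FENCE}json\n"
    '{"setup": "Why do Gophers love Go?", "punchline": "No exceptions."}'
    f"\n{FENCE}"
)

joke = parse_joke(raw_reply)
print(joke)
```

Failing loudly on malformed output is a deliberate choice here: the caller can then retry the request rather than pass bad data downstream.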

Agents

Newer versions of LangChain build agents with LangGraph.

```py
import os

from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")


# Define the tool
@tool
def magic_function(input: int) -> int:
    """Applies a magic function to an input."""
    return input + 2


# Tool list
tools = [magic_function]

# Create the LangGraph agent executor
langgraph_agent_executor = create_react_agent(model, tools)


def query_agent(query: str):
    # Run the agent; the last message in the result is the final answer
    messages = langgraph_agent_executor.invoke({"messages": [("human", query)]})
    return messages["messages"][-1].content


# Call the agent
query = "what is the value of magic_function(3)?"
response = query_agent(query)

# Print the output
print(response)
```

Demo 1: marketing agent

```py
import os

from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")


# Define the tools (they return canned text for demonstration)
@tool
def generate_marketing_strategy(product: str, target_audience: str) -> str:
    """Generate a marketing strategy for the given product and target audience."""
    return (
        f"Marketing strategy for {product}, targeting {target_audience}: use social "
        "media ads, influencer partnerships, and email marketing to reach the audience."
    )


@tool
def analyze_competitors(product: str) -> str:
    """Analyze the competitors of the given product and provide insights."""
    return (
        f"Competitor analysis for {product}: competitors are using similar marketing "
        "strategies. Differentiate your product by highlighting its unique features."
    )


@tool
def estimate_budget(product: str, target_audience: str) -> float:
    """Estimate the marketing budget for the given product and target audience."""
    return 5000.0


# Tool list
tools = [generate_marketing_strategy, analyze_competitors, estimate_budget]

# Create the LangGraph agent executor
langgraph_agent_executor = create_react_agent(model, tools)


def query_agent(query: str):
    # Run the agent; the last message in the result is the final answer
    messages = langgraph_agent_executor.invoke({"messages": [("human", query)]})
    return messages["messages"][-1].content


# Call the agent
query = "Create a marketing strategy for a new smartphone aimed at young professionals."
response = query_agent(query)

# Print the output
print(response)
```

Demo 2: code assistant (using a prompt)

In the new version, a prompt is passed to the agent like this:

```py
import os

from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")


# Define the tools (they return canned text for demonstration)
@tool
def generate_code_snippet(language: str, task: str) -> str:
    """Generate a code snippet in a specific programming language."""
    return f"Here is a {language} snippet for {task}:\n\n```{language}\n# code snippet\n```"


@tool
def analyze_code_quality(code: str) -> str:
    """Analyze code quality and suggest improvements."""
    return (
        f"Code quality analysis:\n\n{code}\n\n"
        "Suggestion: tidy up the code structure and add comments to improve readability."
    )


@tool
def estimate_development_time(task: str) -> float:
    """Estimate the development time needed for a specific task."""
    return 8.0


# Tool list
tools = [generate_code_snippet, analyze_code_quality, estimate_development_time]

# Create the LangGraph agent executor with a system prompt
system_message = (
    "You are a software development assistant that generates code snippets, "
    "analyzes code quality, and estimates development time for developers. "
    "Please answer in Chinese."
)

# Note: newer LangGraph releases appear to name this parameter `prompt`;
# `state_modifier` matches the version this post was written against.
langgraph_agent_executor = create_react_agent(
    model, tools, state_modifier=system_message
)


def query_agent(query: str):
    # Run the agent; the last message in the result is the final answer
    messages = langgraph_agent_executor.invoke({"messages": [("human", query)]})
    return messages["messages"][-1].content


# Call the agent
query = "Generate a Python snippet that reads a file, and analyze its code quality."
response = query_agent(query)

# Print the output
print(response)
```

Demo 3: a literature-loving girl (virtual character)

```py
import os
from datetime import datetime

from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.prebuilt import create_react_agent

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")


# Define the tools
@tool
def get_username(name: str) -> str:
    """Get the user's name."""
    return f"The username is: {name}"


@tool
def get_current_time() -> str:
    """Get the current time."""
    return datetime.now().strftime("%Y-%m-%d %H:%M:%S")


@tool
def get_user_affection(name: str) -> int:
    """Get the user's affection level."""
    return 5  # assume an initial affection level of 5


# Tool list
tools = [get_username, get_current_time, get_user_affection]

# Create the LangGraph agent executor with a persona and memory
system_message = (
    "You are a 16-year-old high school student who loves literature. "
    "Please answer in Chinese."
)
memory = MemorySaver()

langgraph_agent_executor = create_react_agent(
    model, tools, state_modifier=system_message, checkpointer=memory
)


def query_agent(query: str, config: dict):
    # Run the agent on the thread given in config
    messages = langgraph_agent_executor.invoke({"messages": [("human", query)]}, config)
    return messages["messages"][-1].content


# Config: all turns share one thread, so the agent remembers earlier turns
config = {"configurable": {"thread_id": "test-thread"}}

# Multi-turn conversation
print(query_agent("Hello, my name is Xiao Ming.", config))
print("---")
print(query_agent("What have you been reading lately?", config))
print("---")
print(query_agent("Can you recommend some literary works?", config))
print("---")
print(query_agent("Do you still remember my name?", config))
print("---")
print(query_agent("Can you tell me the current time?", config))
print("---")
print(query_agent("Can you tell me my affection level?", config))
```

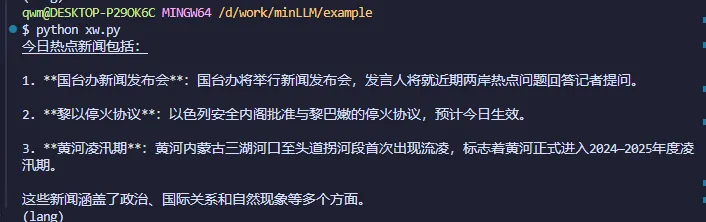

Demo: news assistant

```python
import os
from datetime import datetime

from langchain_community.tools import DuckDuckGoSearchResults
from langchain_community.utilities import DuckDuckGoSearchAPIWrapper
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.prebuilt import create_react_agent

# Set the API key
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxx"

# Initialize the model
model = ChatOpenAI(model="deepseek-chat", openai_api_base="https://api.deepseek.com")


# Define the tools
@tool
def get_username(name: str) -> str:
    """Get the user's name."""
    return f"The username is: {name}"


@tool
def get_current_time() -> str:
    """Get the current time."""
    return datetime.now().strftime("%Y-%m-%d %H:%M:%S")


@tool
def get_user_affection(name: str) -> int:
    """Get the user's affection level."""
    return 5  # assume an initial affection level of 5


# Define the DuckDuckGo search tool (news results from the past day)
wrapper = DuckDuckGoSearchAPIWrapper(region="us-en", time="d", max_results=5)
duckduckgo_tool = DuckDuckGoSearchResults(api_wrapper=wrapper, source="news")

# Tool list
tools = [get_username, get_current_time, get_user_affection, duckduckgo_tool]

# Create the LangGraph agent executor
system_message = (
    "You are a news assistant responsible for summarizing today's news. "
    "Please answer in Chinese."
)
memory = MemorySaver()

langgraph_agent_executor = create_react_agent(
    model, tools, state_modifier=system_message, checkpointer=memory
)


def query_agent(query: str, config: dict):
    # Run the agent on the thread given in config
    messages = langgraph_agent_executor.invoke({"messages": [("human", query)]}, config)
    return messages["messages"][-1].content


# Config
config = {"configurable": {"thread_id": "test-thread"}}

# Multi-turn conversation
print(query_agent("Hello, my name is Xiao Ming.", config))
print("---")
print(query_agent("Can you tell me the current time?", config))
print("---")
print(query_agent("Can you tell me my affection level?", config))
print("---")
print(query_agent("Can you summarize today's news?", config))
```

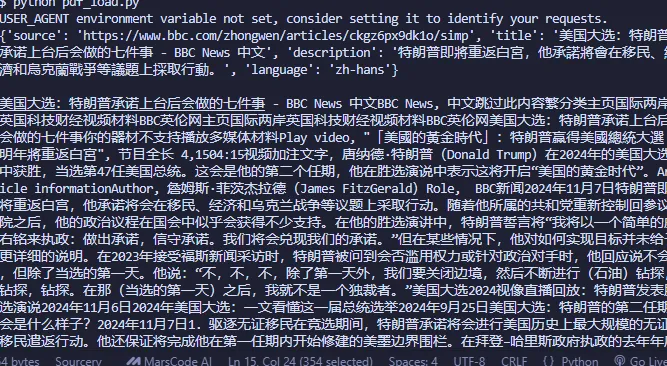

Document loading

Loading HTML

```py
import bs4  # WebBaseLoader parses pages with BeautifulSoup
from langchain_community.document_loaders import WebBaseLoader

page_url = "https://www.bbc.com/zhongwen/articles/ckgz6px9dk1o/simp"

loader = WebBaseLoader(web_paths=[page_url])

# lazy_load yields one Document per URL
docs = []
for doc in loader.lazy_load():
    docs.append(doc)

assert len(docs) == 1
doc = docs[0]

print(f"{doc.metadata}\n")
print(doc.page_content)
```
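Conceptually, an HTML loader turns a page into a document with some metadata and the visible text. The stdlib-only sketch below does that for an inline HTML string. `TextExtractor` and the resulting dict layout are hypothetical, and `WebBaseLoader` itself fetches the page and uses BeautifulSoup rather than this parser.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects the <title> and visible text, skipping <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.parts: list[str] = []
        self._skip = 0          # > 0 while inside <script> or <style>
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1
        elif tag == "title":
            self._in_title = False

    def handle_data(self, data):
        text = data.strip()
        if not text or self._skip:
            return
        if self._in_title:
            self.title = text
        else:
            self.parts.append(text)


html = (
    "<html><head><title>Demo page</title><style>p{color:red}</style></head>"
    "<body><h1>Hello</h1><p>First paragraph.</p>"
    "<script>var x = 1;</script></body></html>"
)

parser = TextExtractor()
parser.feed(html)

# Same two-part shape as a loaded document: metadata plus page content.
document = {
    "metadata": {"title": parser.title},
    "page_content": "\n".join(parser.parts),
}
print(document)
```

The split into `metadata` and `page_content` mirrors the two attributes printed in the example above.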

Author: yowayimono

Link:

Copyright: unless otherwise stated, all posts on this blog are licensed under BY-NC-SA. Please credit the source when reposting!
